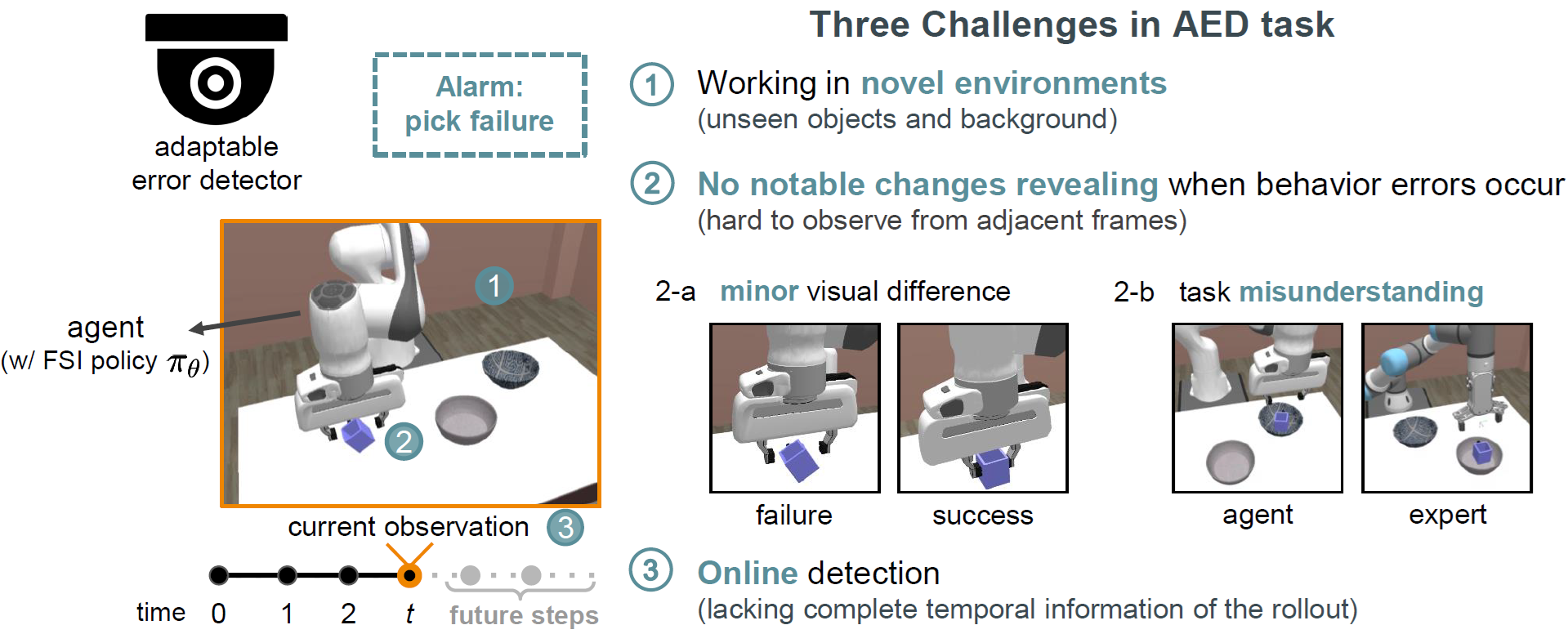

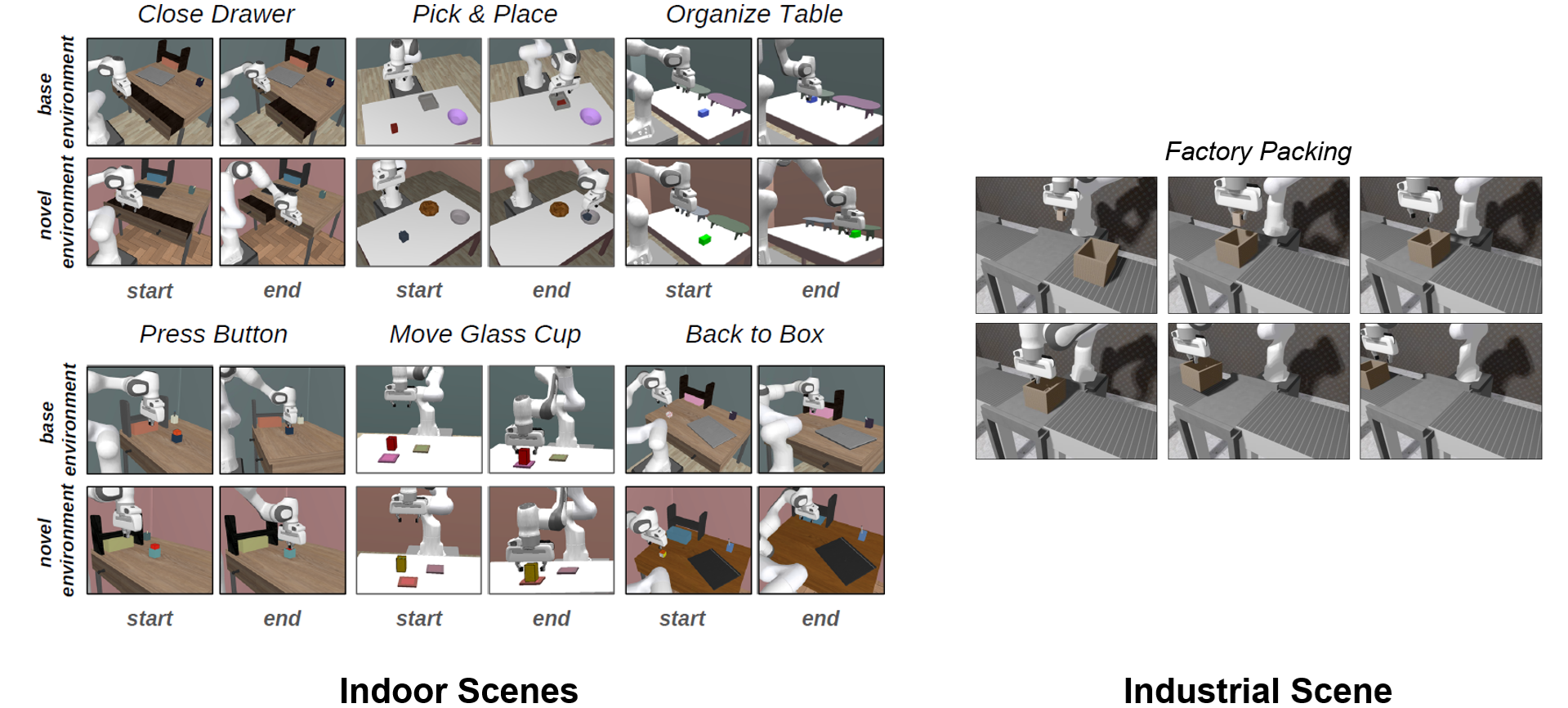

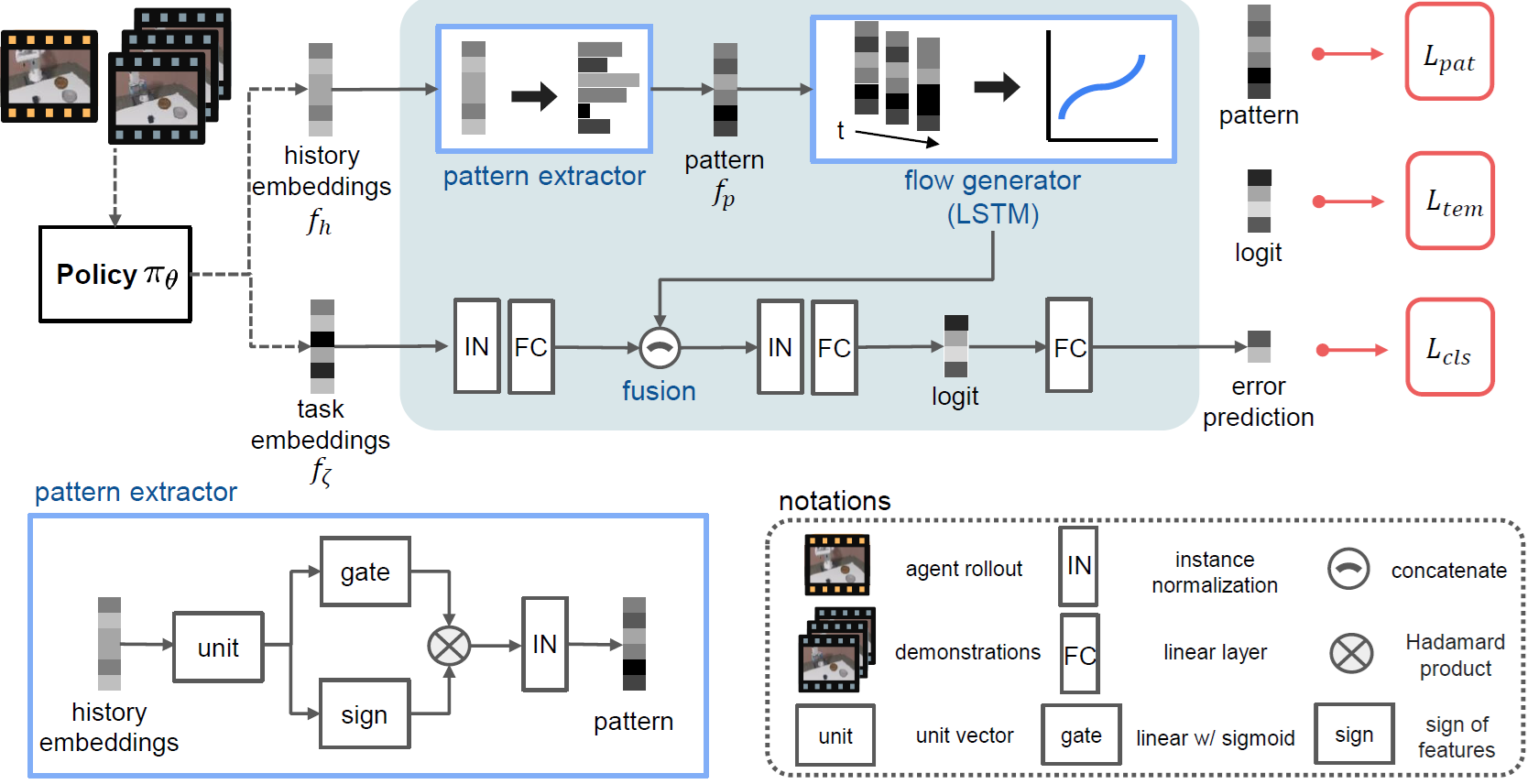

We introduce a new task called Adaptable Error Detection (AED), which aims to identify behavior errors in few-shot imitation (FSI) policies based on visual observations in novel environments. The potential to cause serious damage to surrounding areas limits the application of FSI policies in real-world scenarios. Thus, a robust system is necessary to notify operators when FSI policies are inconsistent with the intent of demonstrations. This task introduces three challenges: (1) detecting behavior errors in novel environments, (2) identifying behavior errors that occur without revealing notable changes, and (3) working without complete temporal information of the rollout, because detection must run online. However, existing benchmarks cannot support the development of AED because their tasks do not present all of these challenges. To this end, we develop a cross-domain AED benchmark consisting of 322 base and 153 novel environments. Additionally, we propose Pattern Observer (PrObe) to address these challenges. PrObe is equipped with a powerful pattern extractor and guided by novel learning objectives to parse discernible patterns in the policy feature representations of normal or error states. Through our comprehensive evaluation, PrObe demonstrates superior capability to detect errors arising from a wide range of FSI policies, consistently surpassing strong baselines. Moreover, we conduct detailed ablations to validate the effectiveness of the proposed architecture design, and a pilot study on error correction to validate the practicality of the AED task.

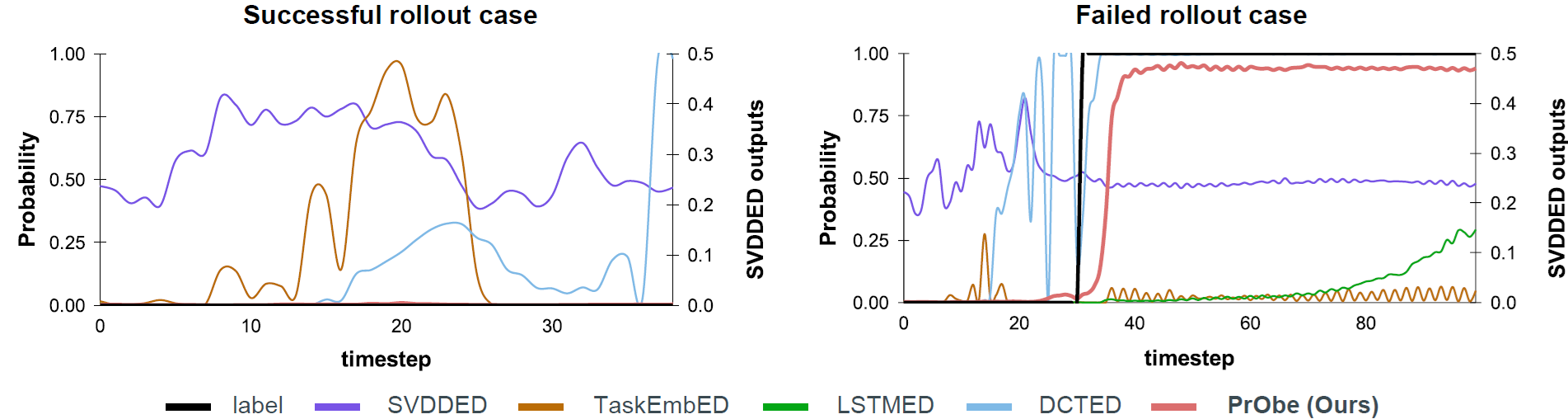

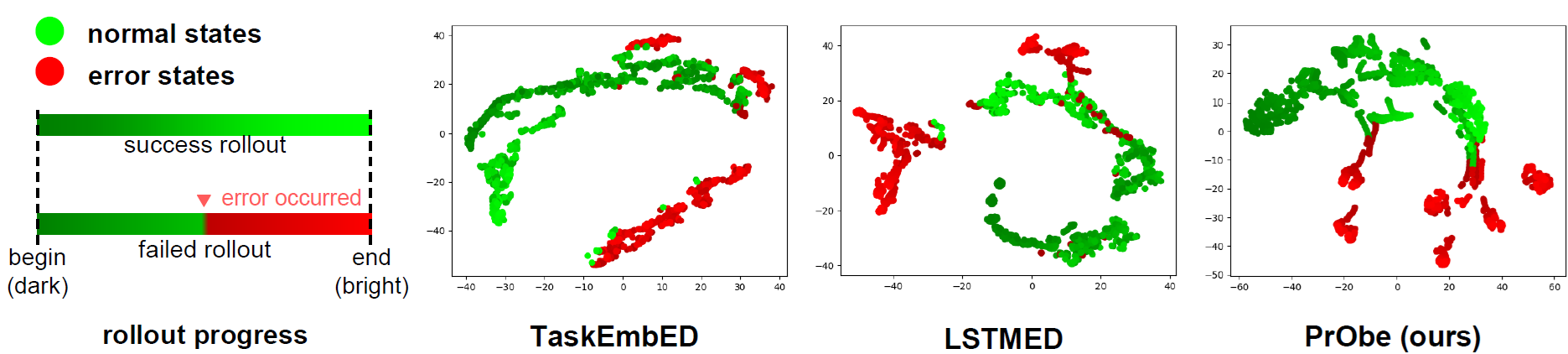

Few-shot imitation (FSI) policies have achieved notable breakthroughs in recent studies. However, their potential to cause serious damage in real-world scenarios limits broader applications. A robust system is essential to notify operators when FSI policies deviate from the intended behavior illustrated in demonstrations. Thus, we formulate the Adaptable Error Detection (AED) task, which presents three key challenges: (1) monitoring policies in unseen environments, (2) detecting erroneous behaviors that may not cause significant visual changes, and (3) performing online detection to terminate the policy in a timely manner. These challenges render existing error or anomaly detection methods inadequate for addressing the AED task.

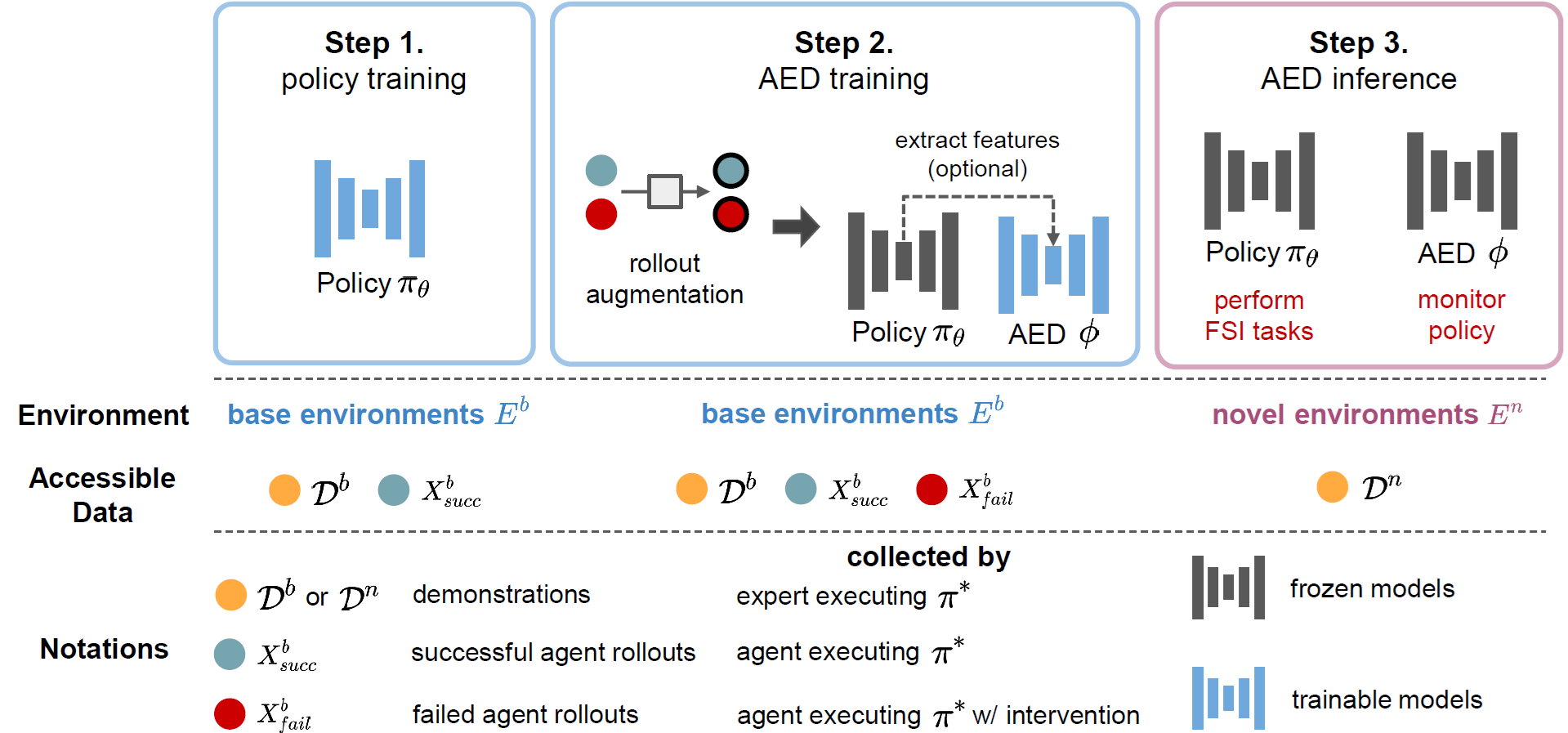

In this work, we design a practical AED framework tailored to the nature of FSI policies. The framework comprises three stages: (1) FSI policies are trained in base environments using successful agent rollouts and expert demonstrations; (2) an error detector is trained, also in the base environments, to distinguish between states from successful and failed agent rollouts by referencing expert demonstrations, and it can access the policy's internal knowledge if necessary; and (3) the policy is deployed in novel (unseen) environments, where the error detector monitors the policy's behavior and issues an alert when erroneous actions occur.
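The deployment stage can be illustrated with a minimal sketch of an online monitoring loop. This is not the PrObe implementation: the names `monitor_rollout` and `toy_error_score`, the threshold value, and the one-dimensional "observations" are all hypothetical placeholders chosen for illustration. The only property the sketch is meant to show is that, at each timestep, the detector sees the expert demonstration and the rollout history so far, never future frames.

```python
from typing import Callable, Iterable, List, Optional, Sequence

Observation = Sequence[float]  # stand-in for a visual observation or feature


def monitor_rollout(
    demonstration: Sequence[Observation],
    observations: Iterable[Observation],
    error_score: Callable[[Sequence[Observation], Sequence[Observation]], float],
    threshold: float,
) -> Optional[int]:
    """Return the first timestep at which an alert is raised, or None."""
    history: List[Observation] = []
    for t, obs in enumerate(observations):
        history.append(obs)
        # Online constraint: only frames up to the current step are available.
        if error_score(demonstration, history) > threshold:
            return t  # alert the operator; the policy can be stopped here
    return None


def toy_error_score(
    demonstration: Sequence[Observation], history: Sequence[Observation]
) -> float:
    # Hypothetical placeholder for a learned detector: distance from the
    # latest observation to the closest frame in the demonstration.
    latest = history[-1][0]
    return min(abs(latest - frame[0]) for frame in demonstration)


demo = [[0.0], [0.1], [0.2], [0.3], [0.4]]
rollout = [[0.0], [0.1], [0.2], [0.9], [1.0]]  # drifts away at step 3
alert_step = monitor_rollout(demo, rollout, toy_error_score, threshold=0.3)
print(alert_step)  # 3
```

In a real system the score function would be a trained error detector, possibly consuming the policy's internal features as described above, and the threshold would be tuned on rollouts from the base environments.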

@inproceedings{yeh2024aed,
  title={AED: Adaptable Error Detection for Few-shot Imitation Policy},
  author={Jia-Fong Yeh and Kuo-Han Hung and Pang-Chi Lo and Chi-Ming Chung and Tsung-Han Wu and Hung-Ting Su and Yi-Ting Chen and Winston H. Hsu},
  booktitle={The Thirty-eighth Annual Conference on Neural Information Processing Systems (NeurIPS)},
  year={2024}
}